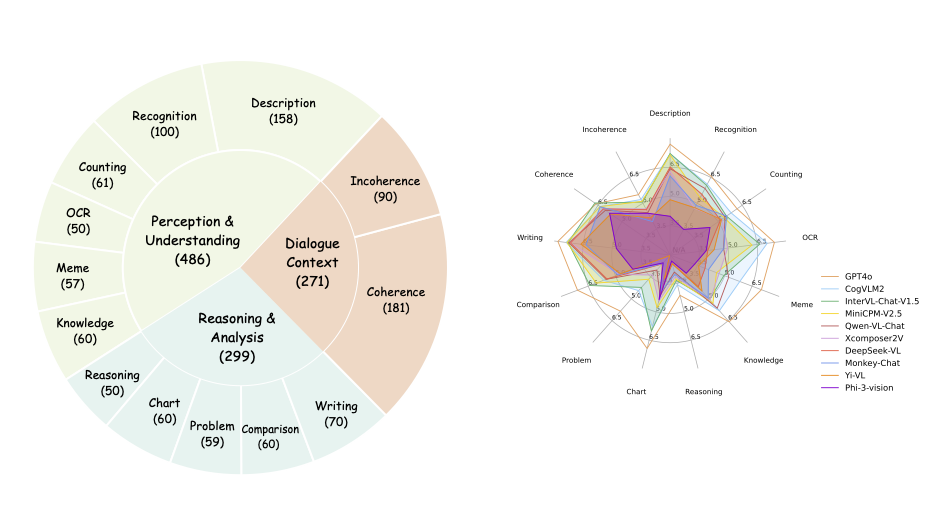

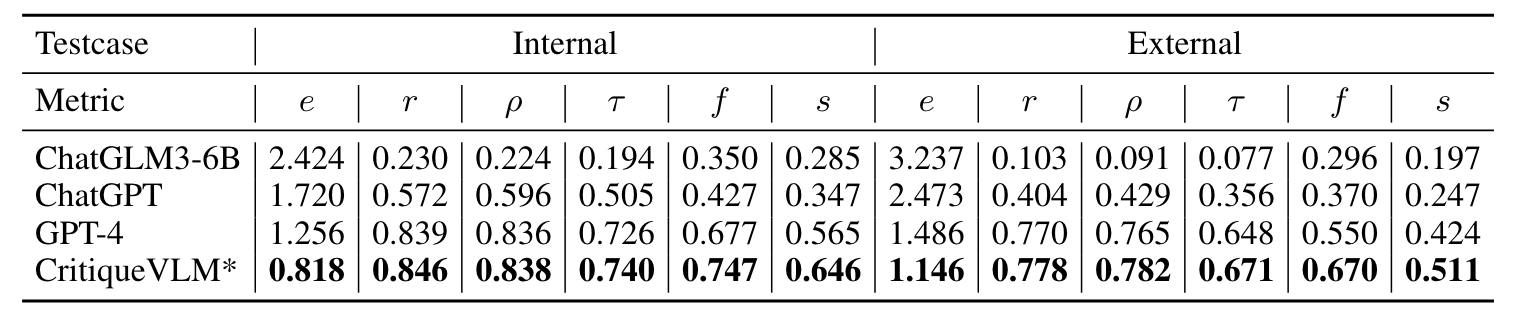

Evaluation results on AlignMMBench. The "Ref." column gives the relative ranking of these models on the OpenCompass leaderboard, which is dominated primarily by English-language benchmarks.

Columns Des. through Kno. belong to Perception & Understanding, Rea. through Wri. to Reasoning & Analysis, and Coh. and Inc. to Context. The model name, Ref., and Avg of the first row are missing in the source.

| Models | Ref. | Avg | Des. | Rec. | Cou. | OCR | Mem. | Kno. | Rea. | Cha. | Pro. | Com. | Wri. | Coh. | Inc. |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
|  |  |  | 7.69 | 6.56 | 6.18 | 7.4 | 7.02 | 6.58 | 4.13 | 6.94 | 5.85 | 7.09 | 7.81 | 6.63 | 5.5 |
| CogVLM2 | 5 | 5.86 | 7.19 | 6.11 | 5.81 | 7.03 | 5.83 | 5.82 | 3.57 | 5.79 | 4.44 | 5.65 | 7.38 | 6.38 | 5.22 |
| InternVL-V1.5 | 2 | 5.73 | 7.21 | 6.04 | 5.52 | 6.64 | 4.96 | 5.06 | 3.34 | 6.02 | 4.22 | 6.41 | 7.28 | 6.70 | 5.07 |
| MiniCPM-V2.5 | 3 | 5.43 | 7.15 | 5.32 | 5.38 | 6.24 | 4.55 | 5.32 | 3.27 | 4.78 | 3.66 | 6.06 | 7.34 | 6.38 | 5.11 |
| Qwen-VL-Chat | 8 | 5.13 | 6.43 | 5.86 | 5.43 | 4.77 | 5.22 | 5.65 | 2.90 | 4.15 | 3.03 | 5.52 | 7.24 | 6.04 | 4.46 |
| XComposer2V | 4 | 4.98 | 6.29 | 4.71 | 5.23 | 4.98 | 4.69 | 5.09 | 3.14 | 4.43 | 3.37 | 4.81 | 7.17 | 6.19 | 4.67 |
| DeepSeek-VL | 7 | 4.75 | 6.52 | 5.52 | 5.14 | 3.95 | 3.92 | 4.18 | 2.46 | 3.98 | 2.61 | 5.47 | 7.16 | 6.17 | 4.63 |
| Monkey-Chat | 6 | 4.71 | 6.06 | 4.88 | 5.53 | 4.78 | 4.11 | 4.95 | 3.03 | 3.94 | 2.58 | 4.88 | 6.35 | 6.17 | 3.94 |
| ShareGPT4V | 10 | 4.31 | 5.24 | 4.49 | 4.84 | 3.89 | 3.61 | 4.68 | 2.44 | 3.65 | 2.24 | 4.51 | 6.32 | 5.91 | 4.16 |
| Yi-VL | 9 | 4.24 | 4.84 | 4.74 | 5.21 | 3.28 | 3.57 | 4.47 | 2.46 | 3.27 | 2.04 | 4.72 | 6.62 | 5.71 | 4.16 |
| LLaVA-v1.5 | 11 | 4.22 | 6.02 | 4.55 | 4.48 | 3.82 | 3.67 | 4.84 | 2.44 | 3.71 | 2.23 | 4.81 | 6.40 | 4.54 | 3.40 |
| Phi-3-Vision | - | 3.90 | 3.99 | 3.49 | 4.49 | 3.87 | 3.34 | 3.25 | 2.32 | 4.36 | 2.54 | 4.05 | 4.78 | 5.78 | 4.42 |
| InstructBLIP | 12 | 3.38 | 3.33 | 3.77 | 4.12 | 3.51 | 2.61 | 3.25 | 1.90 | 3.52 | 1.42 | 3.28 | 3.98 | 5.50 | 3.78 |
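For every named row, the Avg column appears to match an unweighted mean of the 13 dimension scores to within two-decimal rounding. This is inferred from the numbers, not a formula stated with the table. The Python sketch below transcribes the named rows, recomputes that mean, and prints each model's position by Avg next to its OpenCompass "Ref." rank. The helper names (`unweighted_mean`, `avg_mismatches`, `alignmmbench_ranks`) are hypothetical and introduced only for illustration.

```python
# Scores transcribed from the AlignMMBench table above.
# Column order: Des., Rec., Cou., OCR, Mem., Kno., Rea., Cha., Pro., Com., Wri., Coh., Inc.
# Each row: (model, OpenCompass "Ref." rank or None, reported Avg, 13 dimension scores).
# The unnamed first row is omitted because its model name and Avg are missing.
ROWS = [
    ("CogVLM2", 5, 5.86, [7.19, 6.11, 5.81, 7.03, 5.83, 5.82, 3.57, 5.79, 4.44, 5.65, 7.38, 6.38, 5.22]),
    ("InternVL-V1.5", 2, 5.73, [7.21, 6.04, 5.52, 6.64, 4.96, 5.06, 3.34, 6.02, 4.22, 6.41, 7.28, 6.70, 5.07]),
    ("MiniCPM-V2.5", 3, 5.43, [7.15, 5.32, 5.38, 6.24, 4.55, 5.32, 3.27, 4.78, 3.66, 6.06, 7.34, 6.38, 5.11]),
    ("Qwen-VL-Chat", 8, 5.13, [6.43, 5.86, 5.43, 4.77, 5.22, 5.65, 2.90, 4.15, 3.03, 5.52, 7.24, 6.04, 4.46]),
    ("XComposer2V", 4, 4.98, [6.29, 4.71, 5.23, 4.98, 4.69, 5.09, 3.14, 4.43, 3.37, 4.81, 7.17, 6.19, 4.67]),
    ("DeepSeek-VL", 7, 4.75, [6.52, 5.52, 5.14, 3.95, 3.92, 4.18, 2.46, 3.98, 2.61, 5.47, 7.16, 6.17, 4.63]),
    ("Monkey-Chat", 6, 4.71, [6.06, 4.88, 5.53, 4.78, 4.11, 4.95, 3.03, 3.94, 2.58, 4.88, 6.35, 6.17, 3.94]),
    ("ShareGPT4V", 10, 4.31, [5.24, 4.49, 4.84, 3.89, 3.61, 4.68, 2.44, 3.65, 2.24, 4.51, 6.32, 5.91, 4.16]),
    ("Yi-VL", 9, 4.24, [4.84, 4.74, 5.21, 3.28, 3.57, 4.47, 2.46, 3.27, 2.04, 4.72, 6.62, 5.71, 4.16]),
    ("LLaVA-v1.5", 11, 4.22, [6.02, 4.55, 4.48, 3.82, 3.67, 4.84, 2.44, 3.71, 2.23, 4.81, 6.40, 4.54, 3.40]),
    ("Phi-3-Vision", None, 3.90, [3.99, 3.49, 4.49, 3.87, 3.34, 3.25, 2.32, 4.36, 2.54, 4.05, 4.78, 5.78, 4.42]),
    ("InstructBLIP", 12, 3.38, [3.33, 3.77, 4.12, 3.51, 2.61, 3.25, 1.90, 3.52, 1.42, 3.28, 3.98, 5.50, 3.78]),
]


def unweighted_mean(scores):
    """Plain arithmetic mean of the 13 dimension scores (assumed aggregation)."""
    return sum(scores) / len(scores)


def avg_mismatches(rows, tol=0.005):
    """Models whose reported Avg is NOT within two-decimal rounding of the unweighted mean."""
    return [name for name, _ref, avg, scores in rows if abs(unweighted_mean(scores) - avg) > tol]


def alignmmbench_ranks(rows):
    """Rank the listed models by reported Avg, where 1 is the highest Avg."""
    ordered = sorted(rows, key=lambda row: row[2], reverse=True)
    return {row[0]: position for position, row in enumerate(ordered, start=1)}


if __name__ == "__main__":
    print("Avg mismatches:", avg_mismatches(ROWS))  # prints: Avg mismatches: []
    ranks = alignmmbench_ranks(ROWS)
    for name, ref, _avg, _scores in ROWS:
        ref_str = "-" if ref is None else str(ref)
        print(f"{name:<14} AlignMMBench {ranks[name]:>2}   OpenCompass Ref. {ref_str:>2}")
```

The AlignMMBench positions computed here cover only the 12 named models. The Ref. values come from OpenCompass and are not renumbered to this subset, so the two columns are comparable as orderings rather than as absolute ranks.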